import plotly.express as px

import polars as pl

import polars.selectors as cs

import palmerpenguins

import nycflights1305 | Introduction to Data Science

Python data workflows with Polars and Plotly

1 Overview

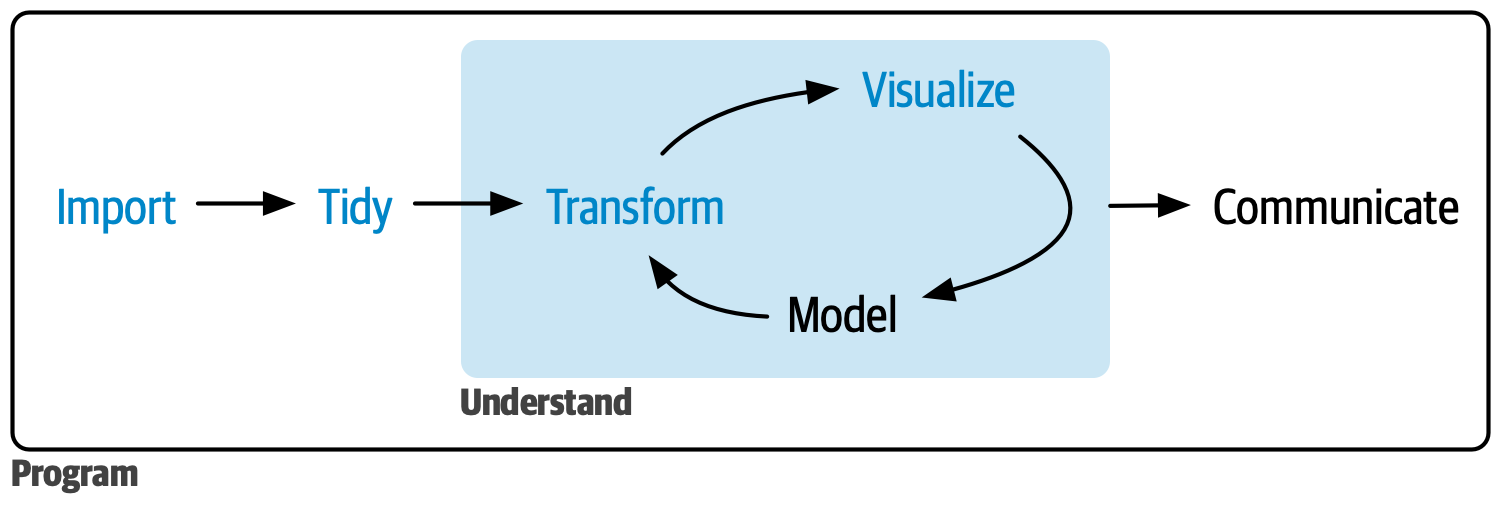

In this beginning chapter, we will cover the fundamentals of data analysis in the form of importing, tidying, transforming, and visualizing data, as shown below:

By the end of this module, you will be able to:

- Import data from various file formats into Python using Polars

- Clean and reshape datasets to facilitate analysis

- Transform data through filtering, sorting, and creating new variables

- Group and aggregate data to identify patterns and trends

- Visualize distributions and relationships using Plotly Express

- Apply tidy data principles to structure datasets effectively

1.1 Initialization

At this point, you will need to install a few more Python packages:

terminal

uv add polars[all] plotly[express] statsmodels palmerpenguins nycflights13Once installed, import the following:

2 Data visualization

Python has several systems for making graphs, but we will be focusing on Plotly, specifically Plotly Express. Plotly Express contains functions that can create entire plots at once, and makes it easy to create most common figures.

This section will teach you how to visualize your data using Plotly Express, walking through visualizing distributions of single variables, as well as relationships between two or more variables.

2.1 The

penguins data frame

The dataset we will be working with is a commonly used one,

affectionately referred to as the Palmer Penguins, which includes body

measurements for penguins on three islands in the Palmer Archipelago. A

data frame is a rectangular collection of variables (in

the columns) and observations (in the rows). penguins

contains 344 observations collected and made available by Dr. Kristen

Gorman and the Palmer Station, Antarctica.

Let’s define some term:

- A variable is a quantity, quality, or property that you can measure.

- A value is the state of a variable when you measure it. The value of a variable may change from measurement to measurement.

- An observation is a set of measurements made under similar conditions (you usually make all of the measurements in an observation at the same time and on the same object). An observation will contain several values, each associated with a different variable. We’ll sometimes refer to an observation as a data point.

- Tabular data is a set of values, each associated with a variable and an observation. Tabular data is tidy if each value is placed in its own “cell”, each variable in its own column, and each observation in its own row.

In this context, a variable refers to an attribute of all the penguins, and an observation refers to all the attributes of a single penguin.

We will use palmerpenguins package to get the

penguins data, and convert it to a polars data

frame:

penguins = pl.from_pandas(palmerpenguins.load_penguins())The reason we convert to a Polars data frame is because we want to use the tools and methods that come with Polars:

type(penguins)polars.dataframe.frame.DataFrameDepending on what tool/IDE you’re using Python with, just having the

variable name (penguins) as the last line will print a

formatted view of the data. If not, you can also use

print() to use Polars’ native formatting:

penguins| species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex | year |

|---|---|---|---|---|---|---|---|

| str | str | f64 | f64 | f64 | f64 | str | i64 |

| "Adelie" | "Torgersen" | 39.1 | 18.7 | 181.0 | 3750.0 | "male" | 2007 |

| "Adelie" | "Torgersen" | 39.5 | 17.4 | 186.0 | 3800.0 | "female" | 2007 |

| "Adelie" | "Torgersen" | 40.3 | 18.0 | 195.0 | 3250.0 | "female" | 2007 |

| "Adelie" | "Torgersen" | null | null | null | null | null | 2007 |

| … | … | … | … | … | … | … | … |

| "Chinstrap" | "Dream" | 43.5 | 18.1 | 202.0 | 3400.0 | "female" | 2009 |

| "Chinstrap" | "Dream" | 49.6 | 18.2 | 193.0 | 3775.0 | "male" | 2009 |

| "Chinstrap" | "Dream" | 50.8 | 19.0 | 210.0 | 4100.0 | "male" | 2009 |

| "Chinstrap" | "Dream" | 50.2 | 18.7 | 198.0 | 3775.0 | "female" | 2009 |

print(penguins)shape: (344, 8)

┌───────────┬───────────┬──────────────┬──────────────┬──────────────┬─────────────┬────────┬──────┐

│ species ┆ island ┆ bill_length_ ┆ bill_depth_m ┆ flipper_leng ┆ body_mass_g ┆ sex ┆ year │

│ --- ┆ --- ┆ mm ┆ m ┆ th_mm ┆ --- ┆ --- ┆ --- │

│ str ┆ str ┆ --- ┆ --- ┆ --- ┆ f64 ┆ str ┆ i64 │

│ ┆ ┆ f64 ┆ f64 ┆ f64 ┆ ┆ ┆ │

╞═══════════╪═══════════╪══════════════╪══════════════╪══════════════╪═════════════╪════════╪══════╡

│ Adelie ┆ Torgersen ┆ 39.1 ┆ 18.7 ┆ 181.0 ┆ 3750.0 ┆ male ┆ 2007 │

│ Adelie ┆ Torgersen ┆ 39.5 ┆ 17.4 ┆ 186.0 ┆ 3800.0 ┆ female ┆ 2007 │

│ Adelie ┆ Torgersen ┆ 40.3 ┆ 18.0 ┆ 195.0 ┆ 3250.0 ┆ female ┆ 2007 │

│ Adelie ┆ Torgersen ┆ null ┆ null ┆ null ┆ null ┆ null ┆ 2007 │

│ … ┆ … ┆ … ┆ … ┆ … ┆ … ┆ … ┆ … │

│ Chinstrap ┆ Dream ┆ 43.5 ┆ 18.1 ┆ 202.0 ┆ 3400.0 ┆ female ┆ 2009 │

│ Chinstrap ┆ Dream ┆ 49.6 ┆ 18.2 ┆ 193.0 ┆ 3775.0 ┆ male ┆ 2009 │

│ Chinstrap ┆ Dream ┆ 50.8 ┆ 19.0 ┆ 210.0 ┆ 4100.0 ┆ male ┆ 2009 │

│ Chinstrap ┆ Dream ┆ 50.2 ┆ 18.7 ┆ 198.0 ┆ 3775.0 ┆ female ┆ 2009 │

└───────────┴───────────┴──────────────┴──────────────┴──────────────┴─────────────┴────────┴──────┘This data frame contains 8 columns. For an alternative view, use

DataFrame.glimpse(), which is helpful for wide tables that

have many columns:

penguins.glimpse()Rows: 344

Columns: 8

$ species <str> 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie', 'Adelie'

$ island <str> 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen', 'Torgersen'

$ bill_length_mm <f64> 39.1, 39.5, 40.3, None, 36.7, 39.3, 38.9, 39.2, 34.1, 42.0

$ bill_depth_mm <f64> 18.7, 17.4, 18.0, None, 19.3, 20.6, 17.8, 19.6, 18.1, 20.2

$ flipper_length_mm <f64> 181.0, 186.0, 195.0, None, 193.0, 190.0, 181.0, 195.0, 193.0, 190.0

$ body_mass_g <f64> 3750.0, 3800.0, 3250.0, None, 3450.0, 3650.0, 3625.0, 4675.0, 3475.0, 4250.0

$ sex <str> 'male', 'female', 'female', None, 'female', 'male', 'female', 'male', None, None

$ year <i64> 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007

Among these variables are:

species: a penguin’s species (Adelie, Chinstrap, or Gentoo).flipper_length_mm: length of a penguin’s flipper, in millimeters.body_mass_g: body mass of a penguin, in grams.

2.2 First visualization

Our goal is to recreate the following visual that displays the relationship between flipper lengths and body masses of these penguins, taking into consideration the species of the penguin.

2.3 Using Plotly Express

With Plotly Express, you begin a plot by calling a plotting function

from the module, commonly referred to as px. You can then

add arguments to your plot function for more customization.

At it’s most basic form, the plotting function creates a blank canvas with a grid since we’ve given it no data.

px.scatter()Next, we need to actually provide data, along with the appropriate number of variables depending on the type of plot we are trying to create.

px.scatter(data_frame=penguins, x="flipper_length_mm", y="body_mass_g")px.scatter() creates a scatter plot, and

we will learn many more plot types through out the course. You can learn

more about the different plots Plotly Express offers at their gallery.

This doesn’t match our “final goal” yet, but using this plot we can start answering the question that motivated our exploration: “What does the relationship between flipper length and body mass look like?” The relationship appears to be positive (as flipper length increases, so does body mass), fairly linear (the points are clustered around a line instead of a curve), and moderately strong (there isn’t too much scatter around such a line). Penguins with longer flippers are generally larger in terms of their body mass.

Before we go further, I want to point out that this dataset has some

missing values for flipper_length_mm and

body_mass_g, but Plotly does not warn you about this when

creating the plot. If one and/or other variable is missing data, we

cannot plot that.

2.4 Adding aesthetics and layers

Scatter plots are useful for displaying the relationship between two numerical variables, but it’s always a good idea to be skeptical of any apparent relationship between two variables and ask if there may be other variables that explain or change the nature of this apparent relationship. For example, does the relationship between flipper length and body mass differ by species? Let’s incorporate species into our plot and see if this reveals any additional insights into the apparent relationship between these variables. We will do this by representing species with different colored points.

To achieve this, we will use some of the other arguments that

px.scatter() provides for us, like color:

px.scatter(

data_frame=penguins,

x="flipper_length_mm",

y="body_mass_g",

color="species"

)When a categorical variable is mapped to an aesthetic, Plotly will automatically assign a unique value of the aesthetic (here, a unique color) to each unique level of the variable (each of the three species), a process known as scaling. Plotly will also add a legend that explains which value correspond to which levels.

Now let’s add another layer, a trendline displaying the relationship

between body mass and flipper length. px.scatter() has an

argument for this, trendline, and a couple other arguments

that modify its behavior. Specifically, we want to draw a line of best

fit using Ordinary Least Squares (ols). You can see the other options,

and more info about this plotting function with

?px.scatter() or in the online documentation.

px.scatter(

data_frame=penguins,

x="flipper_length_mm",

y="body_mass_g",

color="species",

trendline="ols"

)We’ve added lines, but this plot doesn’t look like our final goal,

which only has one line for the entire dataset, opposed to separate

lines for each of the penguin species. px.scatter() has an

argument, trendline_scope, which controls how the trendline

is drawn when there are groups, in this case created when we used

color="species". The default for

trendline_scope is "trace", which draws a line

per color, symbol, facet, etc., and "overall", which

computes one trendline for the entire dataset,a nd replicates across all

facets.

px.scatter(

data_frame=penguins,

x="flipper_length_mm",

y="body_mass_g",

color="species",

trendline="ols",

trendline_scope="overall"

)Now we have something that is very close to our final plot, thought it’s not there yet. We still need to use different shapes for each species and improve the labels.

It’s generally not a good idea to represent information only using

colors on a plot as people perceive colors differently do to color

blindness or other color vision difference. px.scatter()

allows us to control the shapes of the dots using the

symbol argument.

px.scatter(

penguins,

x="flipper_length_mm",

y="body_mass_g",

color="species",

symbol="species",

trendline="ols",

trendline_scope="overall",

)Note that the legend is automatically updated to reflect the different shapes of the points as well.

Finally, we can use title, subtitle, and

labels arguments to update our labels. title

and subtitle just take a string, adding labels are bit more

advanced. labels takes a dictionary with key:value combos

for each of the labels on the plot that you would like to change. In our

plot, we want to update the labels for the x-axis, y-axis, and the

legend, but we refer to them by their current label, not their

position:

px.scatter(

penguins,

x="flipper_length_mm",

y="body_mass_g",

color="species",

symbol="species",

trendline="ols",

trendline_scope="overall",

title="Body mass and flipper length",

subtitle="Dimensions for Adelie, Chinstrap, and Gentoo Penguins",

labels={

"species":"Species",

"body_mass_g":"Body mass (g)",

"flipper_length_mm":"Flipper length (mm)"

}

)Now we have our final plot. If you haven’t noticed already, Plotly creates interactive plots, you can hover over certain data points to see their values, use the legend as a filter, and so on. In the top right of every plot, you will see a menu with some options.

2.5 Visualizing distributions

How you visualize the distribution of a variable depends on the type of the variable: categorical or numerical.

2.5.1 A categorical variable

A value is categorical if it can only take one of a

small set of values. To examine the distribution of a categorical

variable, you can use a bar chart. The height

of the bars displays how many observations occurred with each

x value.

px.bar(penguins, x="species")px.bar() will result in one rectangle drawn per

row of input, which can result in the striped look above. To

combine these rectangles into on color per position, we can

pre-calculate the count as and use it as the

y value:

# we'll learn more about this Polars code later

penguins_count = penguins.group_by("species").len("count")

print(penguins_count)

px.bar(penguins_count, x="species", y="count")shape: (3, 2)

┌───────────┬───────┐

│ species ┆ count │

│ --- ┆ --- │

│ str ┆ u32 │

╞═══════════╪═══════╡

│ Adelie ┆ 152 │

│ Gentoo ┆ 124 │

│ Chinstrap ┆ 68 │

└───────────┴───────┘The order of categorical values in axes, legends, and facets depends

on the order in which these values are first encountered in

data_frame. It’s often preferable to re-order the bars

based on their frequency, which we can do with the

category_orders argument. category_orders

takes a dictionary where the keys correspond to column names, and the

values should be lists of strings corresponding to the specific display

order desired

px.bar(

penguins_count,

x="species",

y="count",

category_orders={"species": ["Adelie", "Gentoo", "Chinstrap"]}

)While it’s easy enough to manually sort three columns, this could become very tedious for more columns. Here is one programmatic way you could sort the columns:

penguins_sorted = (

penguins_count

.sort(by="count", descending=True)

.get_column("species")

)

print(penguins_sorted)

px.bar(

penguins_count,

x="species",

y="count",

category_orders={"species": penguins_sorted}

)shape: (3,)

Series: 'species' [str]

[

"Adelie"

"Gentoo"

"Chinstrap"

]We will dive into the data manipulation code later, this is just to show what’s possible.

2.5.2 A numerical variable

A variable is numerical (or quantitative) if it can take on a wide range of numerical values, and it is sensible to add, subtract, or take averages with those values. Numerical variables can be continuous or discrete.

One common visualization for distributions of continuous variables is a histogram.

px.histogram(penguins, x="body_mass_g")A histogram divides the x-axis into equally spaced bins and then uses

the heigh of the bar to display the number observations that fall in

each bin. In the graph above, the tallest bar shows that 39 observations

have a body_mass_g value between 3,500 and 3,700 grams,

which are the left and right edges of the bar.

When working with histograms, it’s a good idea to use different number of bins to reveal different patterns in the data. In the plots below, X bars is too many, resulting in narrow bars. Similarly, 3 bins is too few, resulting in all the data being binned into huge categories that make it difficult to determine the shape of the distribution. A bin number of 20 provides a sensible balance.

px.histogram(penguins, x="body_mass_g", nbins=200)

px.histogram(penguins, x="body_mass_g", nbins=3)

px.histogram(penguins, x="body_mass_g", nbins=20)An alternative visualization for distributions of numerical variables is a density plot. A density plot is a smoothed-out version of a histogram and a practical alternative, particularly for continuous data that comes from an underlying smooth distribution. At the time of writing, Plotly Express doesn’t have a quick way to create a density plot, but it does offer very customizable violin plots, which we can make to look like a density plot if we would like.

px.violin(penguins, x="body_mass_g")The density plot is similar to the violin plot, with only one side, and the peaks are more exaggerated:

px.violin(

penguins,

x="body_mass_g", # plots the variable across the x-axis

range_y=[

0, # limits the bottom of y-axis, removing reflection

0.25 # limits the top of y-axis, stretching the peaks

]

)While this workaround works, sticking to the original violin plot I think looks better, and we can add some extra arguments to see more details:

px.violin(penguins, x="body_mass_g", points="all")Play around with different arguments and see what you like best!

As an analogy to understand these plots vs a histogram, imagine a histogram made out of wooden blocks. Then, imagine that you drop a cooked spaghetti string over it. The shape the spaghetti will take draped over blocks can be thought of as the shape of the density curve. It shows fewer details than a histogram but can make it easier to quickly glean the shape of the distribution, particularly with respect to modes and skewness.

2.6 Visualizing relationships

To visualize a relationship we need to have at least two variables mapped to aesthetics of a plot. In the following sections you will learn about commonly used plots for visualizing relationships between two or more variables and the plots used for creating them.

2.6.1 A numerical and a categorical variable

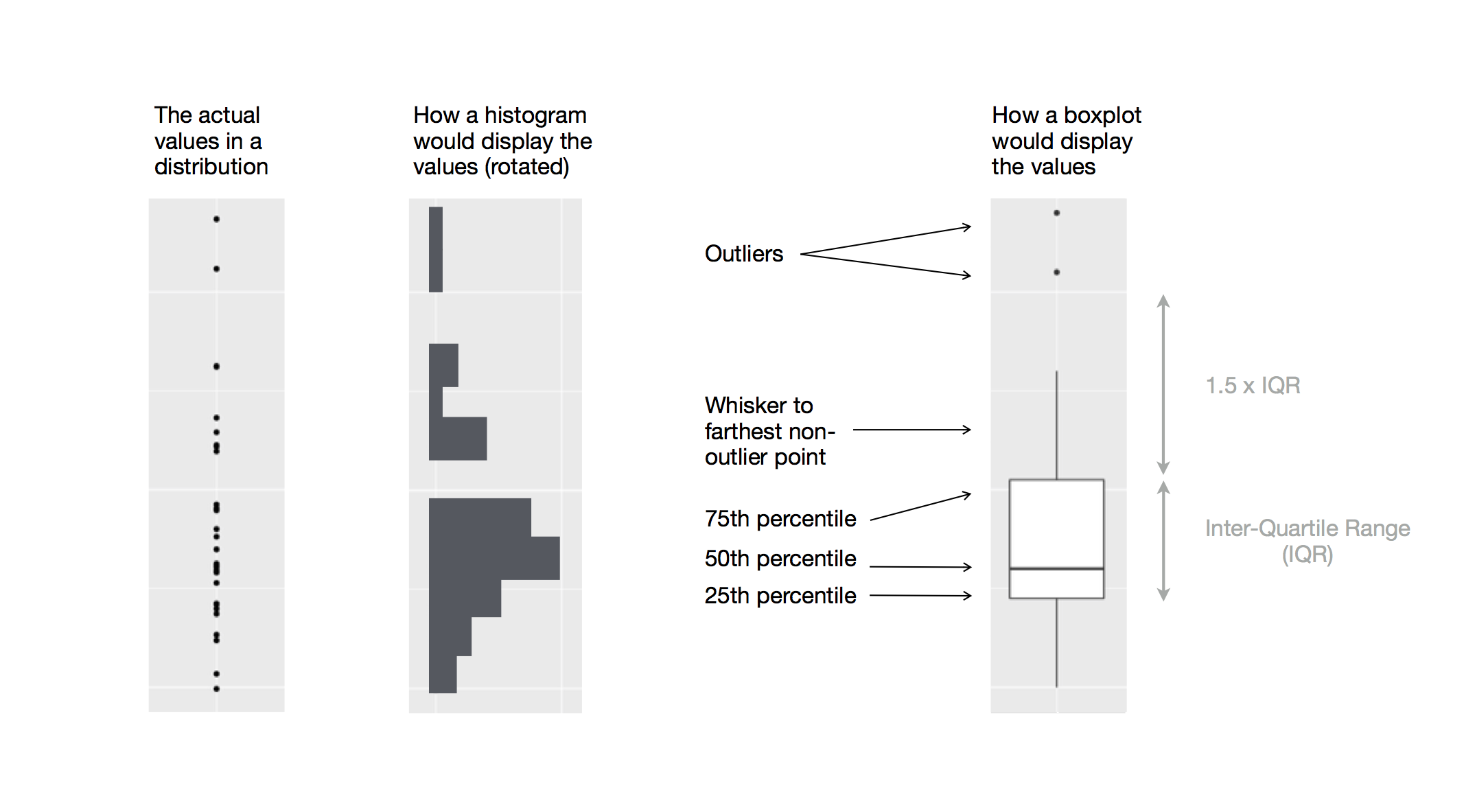

To visualize the relationship between a numerical and a categorical variable we can use side-by-side box plots. A boxplot is a type of visual shorthand for measures of position (percentiles) that describe a distribution. It is also useful for identifying potential outliers. Each boxplot consists of:

A box that indicates the range of the middle half of the data, a distance known as the inter-quartile range (IQR), stretching from the 25th percentile of the distribution to the 75th percentile. In the middle of the box is a line that displays the median, i.e. 50th percentile, of the distribution. These three lines give you a sense of the spread of the distribution and whether or not the distribution is symmetric about the median or skewed to one side.

Visual points that display observations that fall more than 1.5 times the IQR from either edge of the box. These outlying points are unusual so are plotted individually.

A line (or whisker) that extends from each end of the box and goes to the farthest non-outlier point in the distribution.

Let’s take a look at the distribution of body mass by species using

px.box()

px.box(penguins, x="species", y="body_mass_g")Alternatively, we can make violin plots with multiple groups:

px.violin(penguins, x="body_mass_g", color="species", box=True)As we’ve seen before, there are many ways to see and code what we are looking for.

2.6.2 Two categorical variables

We can use stacked bar plots to visualize the relationship between

two categorical variables. For example, the following two stacked bar

plots both display the relationship between island and

species, or specifically, visualizing the distribution of

species within each island.

The first plot shows the frequencies of each species of penguins on each island. The plot of frequencies shows that there are equal numbers of Adelies on each island. But we don’t have a good sense of the percentage balance within each island.

px.bar(penguins, x="island", color="species")or

data = penguins.group_by(["island", "species"]).len("count")

data_order = data.group_by("island").agg(pl.col("count").sum()).sort(by="count", descending=True).get_column("island")

px.bar(

data, x="island", y="count", color="species",

category_orders = {"island": data_order, "species": data_order}

)2.6.3 Two numerical variables

So far we’ve seen scatter plots for visualizing the relationship between two numerical variables. A scatter plot is probably the most commonly used plot for visualizing the relationship between to numerical variables.

px.scatter(penguins, x="flipper_length_mm", y="body_mass_g")2.6.4 Three or more variables

As we saw before, we can incorporate more variables into a plot by mapping them to additional aesthetics. For example, in the following plot, the colors of points represent species and the shapes represent islands.

px.scatter(

penguins,

x="flipper_length_mm", y="body_mass_g",

color="species", symbol="island"

)However adding too many aesthetic mappings to a plot makes it cluttered and difficult to make sense of. Another way, which is particularly useful for categorical variables, is to split your plot into facets, subplots that each display one subset of the data.

Most Plotly Express functions provide arguments to facet, just make sure to check the documentation.

px.scatter(

penguins,

x="flipper_length_mm", y="body_mass_g",

color="species", symbol="island",

facet_col="island"

)2.7 Summary

In this section, you’ve learned the basics of data visualization with Plotly Express. We started with the basic idea that underpins Plotly Express: a visualization is a mapping from variables in your data to aesthetic properties like position, color, size and shape. You then learned about increasing the complexity with more arguments in the Plotly functions. You also learned about commonly used plots for visualizing the distribution of a single variable as well as for visualizing relationships between two or more variables, by leveraging additional aesthetic mappings and/or splitting your plot into small multiples using faceting.

3 Data transformation

Visualization is an important tool for generating insight, but it’s rare that you get the data in exactly the right form you need to make the graph you want. Often you’ll need to create some new variables or summaries to answer your questions with your data, or maybe you just want to rename the variables or reorder the observations to make the data a little easier to work with. We’ve already seen examples of this above when creating the bar charts. In this section, we’ll see how to do that with the Polars package.

The goal of this section is to give you an overview of all the key tools for transforming a data frame. We’ll start with functions that operate on rows and then columns of a data frame, then circle back to talk more about method chaining, an important tool that you use to combine functions. We will then introduce the ability to work with groups. We will end the section with a case study that showcases these functions in action.

3.1 The

flights data frame

To explore basic Polars methods and expressions, we will use the

flights data frame from the nycflights13

package. This dataset contains all 336,776 flights that departed from

New York City in 2013.

flights = (

pl.from_pandas(nycflights13.flights)

.with_columns(pl.col("time_hour").str.to_datetime("%FT%TZ"))

) # We will learn what's going on here in later this section

flights| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 9 | 30 | null | 2200 | null | null | 2312 | null | "9E" | 3525 | null | "LGA" | "SYR" | null | 198 | 22 | 0 | 2013-10-01 02:00:00 |

| 2013 | 9 | 30 | null | 1210 | null | null | 1330 | null | "MQ" | 3461 | "N535MQ" | "LGA" | "BNA" | null | 764 | 12 | 10 | 2013-09-30 16:00:00 |

| 2013 | 9 | 30 | null | 1159 | null | null | 1344 | null | "MQ" | 3572 | "N511MQ" | "LGA" | "CLE" | null | 419 | 11 | 59 | 2013-09-30 15:00:00 |

| 2013 | 9 | 30 | null | 840 | null | null | 1020 | null | "MQ" | 3531 | "N839MQ" | "LGA" | "RDU" | null | 431 | 8 | 40 | 2013-09-30 12:00:00 |

flights is a Polars DataFrame.

Different packages have their own version of a data frame with their own

methods, functions, etc., but in this course, we will be using Polars.

Polars provides its own way of working with data frames, as well as

importing, exporting, printing, and much more, including the previously

shown DataFrame.glimpse() method:

flights.glimpse()Rows: 336776

Columns: 19

$ year <i64> 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013

$ month <i64> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1

$ day <i64> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1

$ dep_time <f64> 517.0, 533.0, 542.0, 544.0, 554.0, 554.0, 555.0, 557.0, 557.0, 558.0

$ sched_dep_time <i64> 515, 529, 540, 545, 600, 558, 600, 600, 600, 600

$ dep_delay <f64> 2.0, 4.0, 2.0, -1.0, -6.0, -4.0, -5.0, -3.0, -3.0, -2.0

$ arr_time <f64> 830.0, 850.0, 923.0, 1004.0, 812.0, 740.0, 913.0, 709.0, 838.0, 753.0

$ sched_arr_time <i64> 819, 830, 850, 1022, 837, 728, 854, 723, 846, 745

$ arr_delay <f64> 11.0, 20.0, 33.0, -18.0, -25.0, 12.0, 19.0, -14.0, -8.0, 8.0

$ carrier <str> 'UA', 'UA', 'AA', 'B6', 'DL', 'UA', 'B6', 'EV', 'B6', 'AA'

$ flight <i64> 1545, 1714, 1141, 725, 461, 1696, 507, 5708, 79, 301

$ tailnum <str> 'N14228', 'N24211', 'N619AA', 'N804JB', 'N668DN', 'N39463', 'N516JB', 'N829AS', 'N593JB', 'N3ALAA'

$ origin <str> 'EWR', 'LGA', 'JFK', 'JFK', 'LGA', 'EWR', 'EWR', 'LGA', 'JFK', 'LGA'

$ dest <str> 'IAH', 'IAH', 'MIA', 'BQN', 'ATL', 'ORD', 'FLL', 'IAD', 'MCO', 'ORD'

$ air_time <f64> 227.0, 227.0, 160.0, 183.0, 116.0, 150.0, 158.0, 53.0, 140.0, 138.0

$ distance <i64> 1400, 1416, 1089, 1576, 762, 719, 1065, 229, 944, 733

$ hour <i64> 5, 5, 5, 5, 6, 5, 6, 6, 6, 6

$ minute <i64> 15, 29, 40, 45, 0, 58, 0, 0, 0, 0

$ time_hour <datetime[μs]> 2013-01-01 10:00:00, 2013-01-01 10:00:00, 2013-01-01 10:00:00, 2013-01-01 10:00:00, 2013-01-01 11:00:00, 2013-01-01 10:00:00, 2013-01-01 11:00:00, 2013-01-01 11:00:00, 2013-01-01 11:00:00, 2013-01-01 11:00:00

In both views, the variable names are followed by abbreviations that

tell you the type of each variable: i64 is short for

integer, f64 is short for float, str is short

for string, and datetime[μs] for date-time (in this case,

down to the micro-seconds).

We’re going to learn the primary methods (or contexts as Polars calls them) which will allow yo uto solve the vast majority of your data manipulation challenges. Before we discuss their individual differences, it’s worth stating what they have in common:

- The methods are always attached (or chained) to a data frame.

- The arguments typically describe which columns to operate on.

- The output is a new data frame (for the most part, ex:

group_by).

Because each method does one thing well, solving complex problems

will usually require combining multiple methods, and we will do so with

something called “method chaining”. You’ve already seen this before,

this is when we attach multiple methods together without creating a

placeholder variable between steps. You can think of each .

operator of saying “then”. This should help you get a sense of the

following code without understanding the details:

flights.filter(

pl.col("dest") == "IAH"

).group_by(

["year", "month", "day"]

).agg( # "aggregate", or summarize

arr_delay=pl.col("arr_delay").mean()

)| year | month | day | arr_delay |

|---|---|---|---|

| i64 | i64 | i64 | f64 |

| 2013 | 9 | 8 | -21.5 |

| 2013 | 5 | 27 | -25.095238 |

| 2013 | 11 | 29 | -25.384615 |

| 2013 | 7 | 17 | 14.7 |

| … | … | … | … |

| 2013 | 9 | 19 | 2.0 |

| 2013 | 5 | 1 | -2.473684 |

| 2013 | 4 | 9 | -1.526316 |

| 2013 | 3 | 3 | -36.65 |

We can also write the previous code in a cleaner format. When

surrounded by parenthesis, the . operator does not have to

“touch” the closing method before it:

(

flights

.filter(pl.col("dest") == "IAH")

.group_by(["year", "month", "day"])

.agg(arr_delay=pl.col("arr_delay").mean())

)If we didn’t use method chaining, we would have to create a bunch of intermediate objects:

flights1 = flights.filter(pl.col("dest") == "IAH")

flights2 = flights1.group_by(["year", "month", "day"])

flights3 = flights2.agg(arr_delay=pl.col("arr_delay").mean())While all of these have their time and place, method chaining generally produces data analysis code that is easier to write and read.

We can organize these contexts (methods) based on what they operate on: rows, columns, groups, or tables.

3.2 Rows

The most important contexts that operate on rows of a dataset are

DataFrame.filter(), which changes which rows are present

without changing their order, and DataFrame.sort(), which

changes the order of the rows without changing which are present. Both

methods only affect the rows, and the columns are left unchanged. We’ll

also see DataFrame.unique() which returns rows with unique

values. Unlike DataFrame.sort() and

DataFrame.filter(), it can also optionally modify the

columns.

3.2.1

DataFrame.filter()

DataFrame.filter()

allows you to keep rows based on the values of the columns. The

arguments (also known as predicates) are the conditions that must be

true to keep the row. For example, we could find all flights that

departed more than 120 minutes late:

flights.filter(pl.col("dep_delay") > 120)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 848.0 | 1835 | 853.0 | 1001.0 | 1950 | 851.0 | "MQ" | 3944 | "N942MQ" | "JFK" | "BWI" | 41.0 | 184 | 18 | 35 | 2013-01-01 23:00:00 |

| 2013 | 1 | 1 | 957.0 | 733 | 144.0 | 1056.0 | 853 | 123.0 | "UA" | 856 | "N534UA" | "EWR" | "BOS" | 37.0 | 200 | 7 | 33 | 2013-01-01 12:00:00 |

| 2013 | 1 | 1 | 1114.0 | 900 | 134.0 | 1447.0 | 1222 | 145.0 | "UA" | 1086 | "N76502" | "LGA" | "IAH" | 248.0 | 1416 | 9 | 0 | 2013-01-01 14:00:00 |

| 2013 | 1 | 1 | 1540.0 | 1338 | 122.0 | 2020.0 | 1825 | 115.0 | "B6" | 705 | "N570JB" | "JFK" | "SJU" | 193.0 | 1598 | 13 | 38 | 2013-01-01 18:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 9 | 30 | 1951.0 | 1649 | 182.0 | 2157.0 | 1903 | 174.0 | "EV" | 4294 | "N13988" | "EWR" | "SAV" | 95.0 | 708 | 16 | 49 | 2013-09-30 20:00:00 |

| 2013 | 9 | 30 | 2053.0 | 1815 | 158.0 | 2310.0 | 2054 | 136.0 | "EV" | 5292 | "N600QX" | "EWR" | "ATL" | 91.0 | 746 | 18 | 15 | 2013-09-30 22:00:00 |

| 2013 | 9 | 30 | 2159.0 | 1845 | 194.0 | 2344.0 | 2030 | 194.0 | "9E" | 3320 | "N906XJ" | "JFK" | "BUF" | 50.0 | 301 | 18 | 45 | 2013-09-30 22:00:00 |

| 2013 | 9 | 30 | 2235.0 | 2001 | 154.0 | 59.0 | 2249 | 130.0 | "B6" | 1083 | "N804JB" | "JFK" | "MCO" | 123.0 | 944 | 20 | 1 | 2013-10-01 00:00:00 |

We can use all of the same boolean expressions we’ve learned

previous, as well as chain them with & (instead of

and), | (instead of or), and

~ (instead of not). Note that Polars is picky

about ambiguity, so each condition we check for also has it’s own

parenthesis, similar to what we might use in a calculator to make sure

the order of operations is being followed exactly as we want:

# Flights that departed on January 1

flights.filter(

(pl.col("month") == 1) & (pl.col("day") == 1)

)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 1 | 1 | null | 1630 | null | null | 1815 | null | "EV" | 4308 | "N18120" | "EWR" | "RDU" | null | 416 | 16 | 30 | 2013-01-01 21:00:00 |

| 2013 | 1 | 1 | null | 1935 | null | null | 2240 | null | "AA" | 791 | "N3EHAA" | "LGA" | "DFW" | null | 1389 | 19 | 35 | 2013-01-02 00:00:00 |

| 2013 | 1 | 1 | null | 1500 | null | null | 1825 | null | "AA" | 1925 | "N3EVAA" | "LGA" | "MIA" | null | 1096 | 15 | 0 | 2013-01-01 20:00:00 |

| 2013 | 1 | 1 | null | 600 | null | null | 901 | null | "B6" | 125 | "N618JB" | "JFK" | "FLL" | null | 1069 | 6 | 0 | 2013-01-01 11:00:00 |

# Flights that departed in January or February

flights.filter(

(pl.col("month") == 1) | (pl.col("month") == 2)

)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 2 | 28 | null | 905 | null | null | 1115 | null | "MQ" | 4478 | "N722MQ" | "LGA" | "DTW" | null | 502 | 9 | 5 | 2013-02-28 14:00:00 |

| 2013 | 2 | 28 | null | 1115 | null | null | 1310 | null | "MQ" | 4485 | "N725MQ" | "LGA" | "CMH" | null | 479 | 11 | 15 | 2013-02-28 16:00:00 |

| 2013 | 2 | 28 | null | 830 | null | null | 1205 | null | "UA" | 1480 | null | "EWR" | "SFO" | null | 2565 | 8 | 30 | 2013-02-28 13:00:00 |

| 2013 | 2 | 28 | null | 840 | null | null | 1147 | null | "UA" | 443 | null | "JFK" | "LAX" | null | 2475 | 8 | 40 | 2013-02-28 13:00:00 |

There’s a useful shortcut when you’re combining | and

==: Expr.is_in(). It keeps rows where the

variable equals one of the values on the right:

# A shorter way to select flights that departed in January or February

flights.filter(

pl.col("month").is_in([1, 2])

)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 2 | 28 | null | 905 | null | null | 1115 | null | "MQ" | 4478 | "N722MQ" | "LGA" | "DTW" | null | 502 | 9 | 5 | 2013-02-28 14:00:00 |

| 2013 | 2 | 28 | null | 1115 | null | null | 1310 | null | "MQ" | 4485 | "N725MQ" | "LGA" | "CMH" | null | 479 | 11 | 15 | 2013-02-28 16:00:00 |

| 2013 | 2 | 28 | null | 830 | null | null | 1205 | null | "UA" | 1480 | null | "EWR" | "SFO" | null | 2565 | 8 | 30 | 2013-02-28 13:00:00 |

| 2013 | 2 | 28 | null | 840 | null | null | 1147 | null | "UA" | 443 | null | "JFK" | "LAX" | null | 2475 | 8 | 40 | 2013-02-28 13:00:00 |

When you run DataFrame.filter(), Polars executes the

filtering operation, creating a new DataFrame, and then prints it. It

doesn’t modify the existing flights dataset because Polars

never modifies the input (unless when explicitly chosen). To save the

result, you need to use the assignment operator, =:

jan1 = flights.filter((pl.col("month") == 1) & (pl.col("day") == 1))

jan1| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 1 | 1 | null | 1630 | null | null | 1815 | null | "EV" | 4308 | "N18120" | "EWR" | "RDU" | null | 416 | 16 | 30 | 2013-01-01 21:00:00 |

| 2013 | 1 | 1 | null | 1935 | null | null | 2240 | null | "AA" | 791 | "N3EHAA" | "LGA" | "DFW" | null | 1389 | 19 | 35 | 2013-01-02 00:00:00 |

| 2013 | 1 | 1 | null | 1500 | null | null | 1825 | null | "AA" | 1925 | "N3EVAA" | "LGA" | "MIA" | null | 1096 | 15 | 0 | 2013-01-01 20:00:00 |

| 2013 | 1 | 1 | null | 600 | null | null | 901 | null | "B6" | 125 | "N618JB" | "JFK" | "FLL" | null | 1069 | 6 | 0 | 2013-01-01 11:00:00 |

3.2.2

DataFrame.sort()

DataFrame.sort()

changes the order of the rows based on the value of the columns. It

takes a data frame and a set of column names (or more complicated

expressions) to order by. If you provide more than one column name, each

additional column will be used to break ties in the values of the

preceding columns. For example, the following code sorts by the

departure time, which is spread over four columns. We get the earliest

years first, then within a year, the earliest months, etc.

flights.sort(by=["year", "month", "day", "dep_time"])| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | null | 1935 | null | null | 2240 | null | "AA" | 791 | "N3EHAA" | "LGA" | "DFW" | null | 1389 | 19 | 35 | 2013-01-02 00:00:00 |

| 2013 | 1 | 1 | null | 1500 | null | null | 1825 | null | "AA" | 1925 | "N3EVAA" | "LGA" | "MIA" | null | 1096 | 15 | 0 | 2013-01-01 20:00:00 |

| 2013 | 1 | 1 | null | 1630 | null | null | 1815 | null | "EV" | 4308 | "N18120" | "EWR" | "RDU" | null | 416 | 16 | 30 | 2013-01-01 21:00:00 |

| 2013 | 1 | 1 | null | 600 | null | null | 901 | null | "B6" | 125 | "N618JB" | "JFK" | "FLL" | null | 1069 | 6 | 0 | 2013-01-01 11:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 12 | 31 | 2328.0 | 2330 | -2.0 | 412.0 | 409 | 3.0 | "B6" | 1389 | "N651JB" | "EWR" | "SJU" | 198.0 | 1608 | 23 | 30 | 2014-01-01 04:00:00 |

| 2013 | 12 | 31 | 2332.0 | 2245 | 47.0 | 58.0 | 3 | 55.0 | "B6" | 486 | "N334JB" | "JFK" | "ROC" | 60.0 | 264 | 22 | 45 | 2014-01-01 03:00:00 |

| 2013 | 12 | 31 | 2355.0 | 2359 | -4.0 | 430.0 | 440 | -10.0 | "B6" | 1503 | "N509JB" | "JFK" | "SJU" | 195.0 | 1598 | 23 | 59 | 2014-01-01 04:00:00 |

| 2013 | 12 | 31 | 2356.0 | 2359 | -3.0 | 436.0 | 445 | -9.0 | "B6" | 745 | "N665JB" | "JFK" | "PSE" | 200.0 | 1617 | 23 | 59 | 2014-01-01 04:00:00 |

You can use positional arguments to sort by multiple columns in the same way:

flights.sort(

by=["year", "month", "day", "dep_time"],

descending=[False, False, False, True]

)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 1 | null | 600 | null | null | 901 | null | "B6" | 125 | "N618JB" | "JFK" | "FLL" | null | 1069 | 6 | 0 | 2013-01-01 11:00:00 |

| 2013 | 1 | 1 | null | 1500 | null | null | 1825 | null | "AA" | 1925 | "N3EVAA" | "LGA" | "MIA" | null | 1096 | 15 | 0 | 2013-01-01 20:00:00 |

| 2013 | 1 | 1 | null | 1935 | null | null | 2240 | null | "AA" | 791 | "N3EHAA" | "LGA" | "DFW" | null | 1389 | 19 | 35 | 2013-01-02 00:00:00 |

| 2013 | 1 | 1 | null | 1630 | null | null | 1815 | null | "EV" | 4308 | "N18120" | "EWR" | "RDU" | null | 416 | 16 | 30 | 2013-01-01 21:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 12 | 31 | 459.0 | 500 | -1.0 | 655.0 | 651 | 4.0 | "US" | 1895 | "N557UW" | "EWR" | "CLT" | 95.0 | 529 | 5 | 0 | 2013-12-31 10:00:00 |

| 2013 | 12 | 31 | 26.0 | 2245 | 101.0 | 129.0 | 2353 | 96.0 | "B6" | 108 | "N374JB" | "JFK" | "PWM" | 50.0 | 273 | 22 | 45 | 2014-01-01 03:00:00 |

| 2013 | 12 | 31 | 18.0 | 2359 | 19.0 | 449.0 | 444 | 5.0 | "DL" | 412 | "N713TW" | "JFK" | "SJU" | 192.0 | 1598 | 23 | 59 | 2014-01-01 04:00:00 |

| 2013 | 12 | 31 | 13.0 | 2359 | 14.0 | 439.0 | 437 | 2.0 | "B6" | 839 | "N566JB" | "JFK" | "BQN" | 189.0 | 1576 | 23 | 59 | 2014-01-01 04:00:00 |

Note that the number of rows has not changed, we’re only arranging the data, we’re not filtering it.

3.2.3

DataFrame.unique()

DataFrame.unique()

finds all the unique rows in a dataset, so technically, it primarily

operates on the rows. Most of the time, however, you’ll want the

distinct combination of some variables, so you can also optionally

supply column names:

# Remove duplicate rows, if any

flights.unique()| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 7 | 15 | 2107.0 | 2030 | 37.0 | 2213.0 | 2202 | 11.0 | "9E" | 4079 | "N8588D" | "JFK" | "BWI" | 37.0 | 184 | 20 | 30 | 2013-07-16 00:00:00 |

| 2013 | 2 | 12 | 1441.0 | 1445 | -4.0 | 1728.0 | 1744 | -16.0 | "B6" | 153 | "N558JB" | "JFK" | "MCO" | 146.0 | 944 | 14 | 45 | 2013-02-12 19:00:00 |

| 2013 | 6 | 16 | 809.0 | 815 | -6.0 | 1038.0 | 1033 | 5.0 | "DL" | 914 | "N351NW" | "LGA" | "DEN" | 234.0 | 1620 | 8 | 15 | 2013-06-16 12:00:00 |

| 2013 | 8 | 6 | 1344.0 | 1349 | -5.0 | 1506.0 | 1515 | -9.0 | "UA" | 643 | "N807UA" | "EWR" | "ORD" | 122.0 | 719 | 13 | 49 | 2013-08-06 17:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 5 | 26 | 1146.0 | 1130 | 16.0 | 1428.0 | 1428 | 0.0 | "B6" | 61 | "N653JB" | "JFK" | "FLL" | 146.0 | 1069 | 11 | 30 | 2013-05-26 15:00:00 |

| 2013 | 12 | 1 | 1656.0 | 1700 | -4.0 | 1757.0 | 1816 | -19.0 | "B6" | 1734 | "N283JB" | "JFK" | "BTV" | 47.0 | 266 | 17 | 0 | 2013-12-01 22:00:00 |

| 2013 | 6 | 28 | 700.0 | 700 | 0.0 | 953.0 | 1006 | -13.0 | "DL" | 1415 | "N662DN" | "JFK" | "SLC" | 263.0 | 1990 | 7 | 0 | 2013-06-28 11:00:00 |

| 2013 | 7 | 24 | 1057.0 | 1100 | -3.0 | 1338.0 | 1349 | -11.0 | "DL" | 695 | "N928DL" | "JFK" | "MCO" | 135.0 | 944 | 11 | 0 | 2013-07-24 15:00:00 |

# Find all unique origin and destination pairs

flights.unique(subset=["origin", "dest"])| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 1 | 2 | 1552.0 | 1600 | -8.0 | 1727.0 | 1725 | 2.0 | "EV" | 4922 | "N371CA" | "LGA" | "ROC" | 47.0 | 254 | 16 | 0 | 2013-01-02 21:00:00 |

| 2013 | 1 | 1 | 1832.0 | 1835 | -3.0 | 2059.0 | 2103 | -4.0 | "9E" | 3830 | "N8894A" | "JFK" | "CHS" | 106.0 | 636 | 18 | 35 | 2013-01-01 23:00:00 |

| 2013 | 1 | 1 | 1558.0 | 1534 | 24.0 | 1808.0 | 1703 | 65.0 | "EV" | 4502 | "N16546" | "EWR" | "BNA" | 168.0 | 748 | 15 | 34 | 2013-01-01 20:00:00 |

| 2013 | 1 | 1 | 810.0 | 810 | 0.0 | 1048.0 | 1037 | 11.0 | "9E" | 3538 | "N915XJ" | "JFK" | "MSP" | 189.0 | 1029 | 8 | 10 | 2013-01-01 13:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 1 | 1 | 805.0 | 805 | 0.0 | 1015.0 | 1005 | 10.0 | "B6" | 219 | "N273JB" | "JFK" | "CLT" | 98.0 | 541 | 8 | 5 | 2013-01-01 13:00:00 |

| 2013 | 1 | 1 | 1318.0 | 1322 | -4.0 | 1358.0 | 1416 | -18.0 | "EV" | 4106 | "N19554" | "EWR" | "BDL" | 25.0 | 116 | 13 | 22 | 2013-01-01 18:00:00 |

| 2013 | 1 | 2 | 905.0 | 822 | 43.0 | 1313.0 | 1045 | null | "EV" | 4140 | "N15912" | "EWR" | "XNA" | null | 1131 | 8 | 22 | 2013-01-02 13:00:00 |

| 2013 | 1 | 1 | 1840.0 | 1845 | -5.0 | 2055.0 | 2030 | 25.0 | "MQ" | 4517 | "N725MQ" | "LGA" | "CRW" | 96.0 | 444 | 18 | 45 | 2013-01-01 23:00:00 |

It should be noted that the default argument for keep is

any, which does not give any guarantee of which

unique rows are kept. If you dataset is ordered, you might want

to use one of the other options for keep.

If you want the number of occurrences instead, you’ll need to use a

combination of DataFrame.group_by along with a

GroupBy.agg().

3.3 Columns

There are two important contexts that affect the columns without

changing the rows: DataFrame.with_columns(), which creates

new columns that are derived from the existing columns, &

select(), which changes which columns are present.

3.3.1

DataFrame.with_columns()

The job of DataFrame.with_columns()

is to add new columns. In the Data Wrangling module, you will learn a

large set of functions that you can use to manipulate different types of

variables. For new, we’ll stick with basic algebra, which allows us to

compute the gain, how much time a delayed flight made up in

the air, and the speed in miles per hour:

flights.with_columns(

gain = pl.col("dep_delay") - pl.col("arr_delay"),

speed = pl.col("distance") / pl.col("air_time") * 60

)| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour | gain | speed |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] | f64 | f64 |

| 2013 | 1 | 1 | 517.0 | 515 | 2.0 | 830.0 | 819 | 11.0 | "UA" | 1545 | "N14228" | "EWR" | "IAH" | 227.0 | 1400 | 5 | 15 | 2013-01-01 10:00:00 | -9.0 | 370.044053 |

| 2013 | 1 | 1 | 533.0 | 529 | 4.0 | 850.0 | 830 | 20.0 | "UA" | 1714 | "N24211" | "LGA" | "IAH" | 227.0 | 1416 | 5 | 29 | 2013-01-01 10:00:00 | -16.0 | 374.273128 |

| 2013 | 1 | 1 | 542.0 | 540 | 2.0 | 923.0 | 850 | 33.0 | "AA" | 1141 | "N619AA" | "JFK" | "MIA" | 160.0 | 1089 | 5 | 40 | 2013-01-01 10:00:00 | -31.0 | 408.375 |

| 2013 | 1 | 1 | 544.0 | 545 | -1.0 | 1004.0 | 1022 | -18.0 | "B6" | 725 | "N804JB" | "JFK" | "BQN" | 183.0 | 1576 | 5 | 45 | 2013-01-01 10:00:00 | 17.0 | 516.721311 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 9 | 30 | null | 2200 | null | null | 2312 | null | "9E" | 3525 | null | "LGA" | "SYR" | null | 198 | 22 | 0 | 2013-10-01 02:00:00 | null | null |

| 2013 | 9 | 30 | null | 1210 | null | null | 1330 | null | "MQ" | 3461 | "N535MQ" | "LGA" | "BNA" | null | 764 | 12 | 10 | 2013-09-30 16:00:00 | null | null |

| 2013 | 9 | 30 | null | 1159 | null | null | 1344 | null | "MQ" | 3572 | "N511MQ" | "LGA" | "CLE" | null | 419 | 11 | 59 | 2013-09-30 15:00:00 | null | null |

| 2013 | 9 | 30 | null | 840 | null | null | 1020 | null | "MQ" | 3531 | "N839MQ" | "LGA" | "RDU" | null | 431 | 8 | 40 | 2013-09-30 12:00:00 | null | null |

Note that since we haven’t assigned the result of the above

computation back to flights, the new variables

gain and speed will only be printed but will

not be stored in a data frame. And if we want them to be available in a

data frame for future use, we should think carefully about whether we

want the result to be assigned back to flights, overwriting

the original data frame with many more variables, or to a new object.

Often, the right answer is a new object that is named informatively to

indicate its contents, e.g., delay_gain, but you might also

have good reasons for overwriting flights.

3.3.2

DataFrame.select()

It’s not uncommon to get datasets with hundreds or even thousands of

variables. In this situation, the first challenge is often just focusing

on the variables you’re interested in. DataFrame.select()

allows you to rapidly zoom in on a useful subset using operations based

on the names of the variables:

- Select columns by name:

flights.select("year")| year |

|---|

| i64 |

| 2013 |

| 2013 |

| 2013 |

| 2013 |

| … |

| 2013 |

| 2013 |

| 2013 |

| 2013 |

- Select multiple columns by passing a list of column names:

flights.select(["year", "month", "day"])| year | month | day |

|---|---|---|

| i64 | i64 | i64 |

| 2013 | 1 | 1 |

| 2013 | 1 | 1 |

| 2013 | 1 | 1 |

| 2013 | 1 | 1 |

| … | … | … |

| 2013 | 9 | 30 |

| 2013 | 9 | 30 |

| 2013 | 9 | 30 |

| 2013 | 9 | 30 |

- Multiple columns can also be selected using positional arguments instead of a list. Expressions are also accepted:

flights.select(

pl.col("year"),

pl.col("month"),

month_add_one = pl.col("month") + 1 # Adds 1 to the values of "month"

)| year | month | month_add_one |

|---|---|---|

| i64 | i64 | i64 |

| 2013 | 1 | 2 |

| 2013 | 1 | 2 |

| 2013 | 1 | 2 |

| 2013 | 1 | 2 |

| … | … | … |

| 2013 | 9 | 10 |

| 2013 | 9 | 10 |

| 2013 | 9 | 10 |

| 2013 | 9 | 10 |

Polars also provides more advanced way to select columns using its Selectors.

Selectors allow for more intuitive selection of columns from DataFrame

objects based on their name, type, or other properties. They unify and

build on the related functionality that is available through the

pl.col() expression and can also broadcast expressions over

the selected columns.

Selectors are available as functions imported from

polars.selectors. Typical/recommended usage is to import

the module as cs and employ selectors from there.

import polars as pl

import polars.selectors as csThere are a number of selectors you can use within select:

cs.starts_with("abc"): matches column names that begin with “abc”.cs.ends_with("xyz"): matches column names that end with “xyz”.cs.contains("ijk"): matches column names that contain “ijk”.cs.matches(r"\d{3}"): matches column names using regex, columns with three digits repeating in the name in this case.cs.temporal(): matches columns with temporal (time) data types.cs.string(): matches columns with string data types.

These are just a few, you can see all the selectors with examples in

the documentation, or by looking at the options after typing

cs. in your editor.

You can combine selectors with the following set

operations:

| Operation | Expression |

|---|---|

| UNION | A | B |

| INTERSECTION | A & B |

| DIFFERENCE | A - B |

| SYMMETRIC DIFFERENCE | A ^ B |

| COMPLEMENT | ~A |

flights.select(cs.temporal() | cs.string())| carrier | tailnum | origin | dest | time_hour |

|---|---|---|---|---|

| str | str | str | str | datetime[μs] |

| "UA" | "N14228" | "EWR" | "IAH" | 2013-01-01 10:00:00 |

| "UA" | "N24211" | "LGA" | "IAH" | 2013-01-01 10:00:00 |

| "AA" | "N619AA" | "JFK" | "MIA" | 2013-01-01 10:00:00 |

| "B6" | "N804JB" | "JFK" | "BQN" | 2013-01-01 10:00:00 |

| … | … | … | … | … |

| "9E" | null | "LGA" | "SYR" | 2013-10-01 02:00:00 |

| "MQ" | "N535MQ" | "LGA" | "BNA" | 2013-09-30 16:00:00 |

| "MQ" | "N511MQ" | "LGA" | "CLE" | 2013-09-30 15:00:00 |

| "MQ" | "N839MQ" | "LGA" | "RDU" | 2013-09-30 12:00:00 |

Note that both individual selector results and selector set operations will always return matching columns in the same order as the underlying DataFrame schema.

3.4 Groups

So far, we’ve learned about contexts (DataFrame methods) that work

with rows and columns. Polars gets even more powerful when

you add in the ability to work with groups. In this section, we’ll focus

on the most important contexts: DataFrame.group_by(),

DataFrame.agg(), and the various slice-ing

contexts.

3.4.1

DataFrame.group_by()

Use DataFrame.group_by()

to divide your dataset into groups meaningful for your analysis:

flights.group_by("month")<polars.dataframe.group_by.GroupBy at 0x1f439f85250>As you can see, DataFrame.group_by() doesn’t change the

data, but returns a GroupBy

object. This acts like a DataFrame, but subsequent

operations will now work “by month”, and comes with some extra

methods.

3.4.2

GroupBy.agg()

The most important grouped operation is an aggregation, which, if

being used to calculate a single summary statistic, reduces the data

frame to have a single row for each group. In Polars, this operation is

performed by GroupBy.agg(),

as shown by the following example, which computes the average departure

delay by month:

(

flights

.group_by("month")

.agg(avg_delay = pl.col("dep_delay").mean())

)| month | avg_delay |

|---|---|

| i64 | f64 |

| 1 | 10.036665 |

| 8 | 12.61104 |

| 12 | 16.576688 |

| 11 | 5.435362 |

| … | … |

| 6 | 20.846332 |

| 5 | 12.986859 |

| 3 | 13.227076 |

| 7 | 21.727787 |

There are two things to note:

- Polars automatically drops the missing values in

dep_delaywhen calculating the mean. - Polars has a few different methods called

mean(), but they do different things depending on root object (DataFrame,GroupBy,Expr, etc.)

You can create any number of aggregations in a single call to

GroupBy.agg(). You’ll learn various useful summaries in

later modules, but one very useful summary is pl.len(),

which returns the number of rows in each group:

(

flights

.group_by("month")

.agg(

avg_delay = pl.col("dep_delay").mean(),

n = pl.len()

)

.sort("month")

)| month | avg_delay | n |

|---|---|---|

| i64 | f64 | u32 |

| 1 | 10.036665 | 27004 |

| 2 | 10.816843 | 24951 |

| 3 | 13.227076 | 28834 |

| 4 | 13.938038 | 28330 |

| … | … | … |

| 9 | 6.722476 | 27574 |

| 10 | 6.243988 | 28889 |

| 11 | 5.435362 | 27268 |

| 12 | 16.576688 | 28135 |

Means and counts can get you a surprisingly long way in data science!

3.4.3 Slicing functions

There are a few ways Polars provides for you to extract specific rows within each group:

GroupBy.head(n = 1)takes the first row from each group. (Also works withDataFrame)GroupBy.tail(n = 1)takes the last row from each group. (Also works withDataFrame)

GroupBy also provides some powerful aggregations for

whole groups, like:

flights.group_by("dest").max() # shows max value for each group and column

flights.group_by("dest").min() # shows min value for each group and column| dest | year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| str | i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| "BUR" | 2013 | 1 | 1 | 1325.0 | 1329 | -11.0 | 6.0 | 1640 | -61.0 | "B6" | 355 | "N503JB" | "JFK" | 293.0 | 2465 | 13 | 0 | 2013-01-01 18:00:00 |

| "SEA" | 2013 | 1 | 1 | 39.0 | 638 | -21.0 | 2.0 | 17 | -75.0 | "AA" | 5 | "N11206" | "EWR" | 275.0 | 2402 | 6 | 0 | 2013-01-01 12:00:00 |

| "DAY" | 2013 | 1 | 1 | 41.0 | 739 | -19.0 | 3.0 | 933 | -59.0 | "9E" | 3805 | "N10156" | "EWR" | 71.0 | 533 | 7 | 0 | 2013-01-01 17:00:00 |

| "LAX" | 2013 | 1 | 1 | 2.0 | 551 | -16.0 | 1.0 | 3 | -75.0 | "AA" | 1 | "N11206" | "EWR" | 275.0 | 2454 | 5 | 0 | 2013-01-01 11:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| "ILM" | 2013 | 9 | 1 | 925.0 | 935 | -19.0 | 4.0 | 1143 | -49.0 | "EV" | 4885 | "N371CA" | "LGA" | 63.0 | 500 | 9 | 0 | 2013-09-07 13:00:00 |

| "TVC" | 2013 | 6 | 2 | 722.0 | 730 | -20.0 | 2.0 | 943 | -39.0 | "EV" | 3402 | "N11107" | "EWR" | 84.0 | 644 | 7 | 0 | 2013-06-14 22:00:00 |

| "OKC" | 2013 | 1 | 1 | 20.0 | 1630 | -13.0 | 3.0 | 1912 | -49.0 | "EV" | 4141 | "N10156" | "EWR" | 162.0 | 1325 | 16 | 0 | 2013-01-02 00:00:00 |

| "MKE" | 2013 | 1 | 1 | 14.0 | 559 | -20.0 | 1.0 | 720 | -63.0 | "9E" | 46 | "N10156" | "EWR" | 93.0 | 725 | 5 | 0 | 2013-01-01 12:00:00 |

If you want the top/bottom k number of rows (optionally

by group), use the DataFrame.top_k or

DataFrame.bottom_k contexts:

flights.top_k(k = 4, by = "arr_delay")

flights.bottom_k(k = 4, by = "arr_delay")| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 5 | 7 | 1715.0 | 1729 | -14.0 | 1944.0 | 2110 | -86.0 | "VX" | 193 | "N843VA" | "EWR" | "SFO" | 315.0 | 2565 | 17 | 29 | 2013-05-07 21:00:00 |

| 2013 | 5 | 20 | 719.0 | 735 | -16.0 | 951.0 | 1110 | -79.0 | "VX" | 11 | "N840VA" | "JFK" | "SFO" | 316.0 | 2586 | 7 | 35 | 2013-05-20 11:00:00 |

| 2013 | 5 | 2 | 1947.0 | 1949 | -2.0 | 2209.0 | 2324 | -75.0 | "UA" | 612 | "N851UA" | "EWR" | "LAX" | 300.0 | 2454 | 19 | 49 | 2013-05-02 23:00:00 |

| 2013 | 5 | 6 | 1826.0 | 1830 | -4.0 | 2045.0 | 2200 | -75.0 | "AA" | 269 | "N3KCAA" | "JFK" | "SEA" | 289.0 | 2422 | 18 | 30 | 2013-05-06 22:00:00 |

3.4.4 Grouping by multiple variables

You can create groups using more than one variable. For example, we could make a group for each date:

daily = flights.group_by(["year", "month", "day"])

daily.max()| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| i64 | i64 | i64 | f64 | i64 | f64 | f64 | i64 | f64 | str | i64 | str | str | str | f64 | i64 | i64 | i64 | datetime[μs] |

| 2013 | 9 | 22 | 2350.0 | 2359 | 239.0 | 2400.0 | 2359 | 232.0 | "YV" | 6181 | "N9EAMQ" | "LGA" | "XNA" | 626.0 | 4983 | 23 | 59 | 2013-09-23 03:00:00 |

| 2013 | 6 | 29 | 2342.0 | 2359 | 313.0 | 2358.0 | 2359 | 284.0 | "WN" | 6054 | "N999DN" | "LGA" | "TYS" | 605.0 | 4983 | 23 | 59 | 2013-06-30 03:00:00 |

| 2013 | 7 | 21 | 2356.0 | 2359 | 580.0 | 2400.0 | 2359 | 645.0 | "YV" | 6120 | "N9EAMQ" | "LGA" | "XNA" | 629.0 | 4983 | 23 | 59 | 2013-07-22 03:00:00 |

| 2013 | 9 | 4 | 2258.0 | 2359 | 296.0 | 2359.0 | 2359 | 148.0 | "YV" | 6101 | "N995AT" | "LGA" | "XNA" | 597.0 | 4983 | 23 | 59 | 2013-09-05 03:00:00 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 2013 | 5 | 9 | 2356.0 | 2359 | 504.0 | 2359.0 | 2359 | 493.0 | "YV" | 5769 | "N986DL" | "LGA" | "XNA" | 620.0 | 4983 | 23 | 59 | 2013-05-10 03:00:00 |

| 2013 | 2 | 13 | 2353.0 | 2359 | 592.0 | 2358.0 | 2359 | 595.0 | "YV" | 6055 | "N995AT" | "LGA" | "XNA" | 616.0 | 4983 | 23 | 59 | 2013-02-14 04:00:00 |

| 2013 | 2 | 28 | 2359.0 | 2359 | 168.0 | 2400.0 | 2358 | 145.0 | "YV" | 5739 | "N9EAMQ" | "LGA" | "XNA" | 612.0 | 4983 | 23 | 59 | 2013-03-01 04:00:00 |

| 2013 | 6 | 10 | 2352.0 | 2359 | 401.0 | 2359.0 | 2359 | 354.0 | "YV" | 6177 | "N9EAMQ" | "LGA" | "XNA" | 604.0 | 4983 | 23 | 59 | 2013-06-11 03:00:00 |

GroupBy methods return a DataFrame, so

there is no need to explicitly “un-group” your dataset.

3.5 Summary

In this chapter, you’ve learned the tools that Polars provides for

working with data frames. The tools are roughly grouped into three

categories: those that manipulate the rows (like

DataFrame.filter() and DataFrame.sort()) those

that manipulate the columns (like DataFrame.select() and

DataFrame.with_columns()) and those that manipulate groups

(like DataFrame.group_by() and GroupBy.agg()).

In this chapter, we’ve focused on these “whole data frame” tools, but

you haven’t yet learned much about what you can do with the individual

variable. We’ll return to that in a later module in the course, where

each section provides tools for a specific type of variable.

4 Data tidying

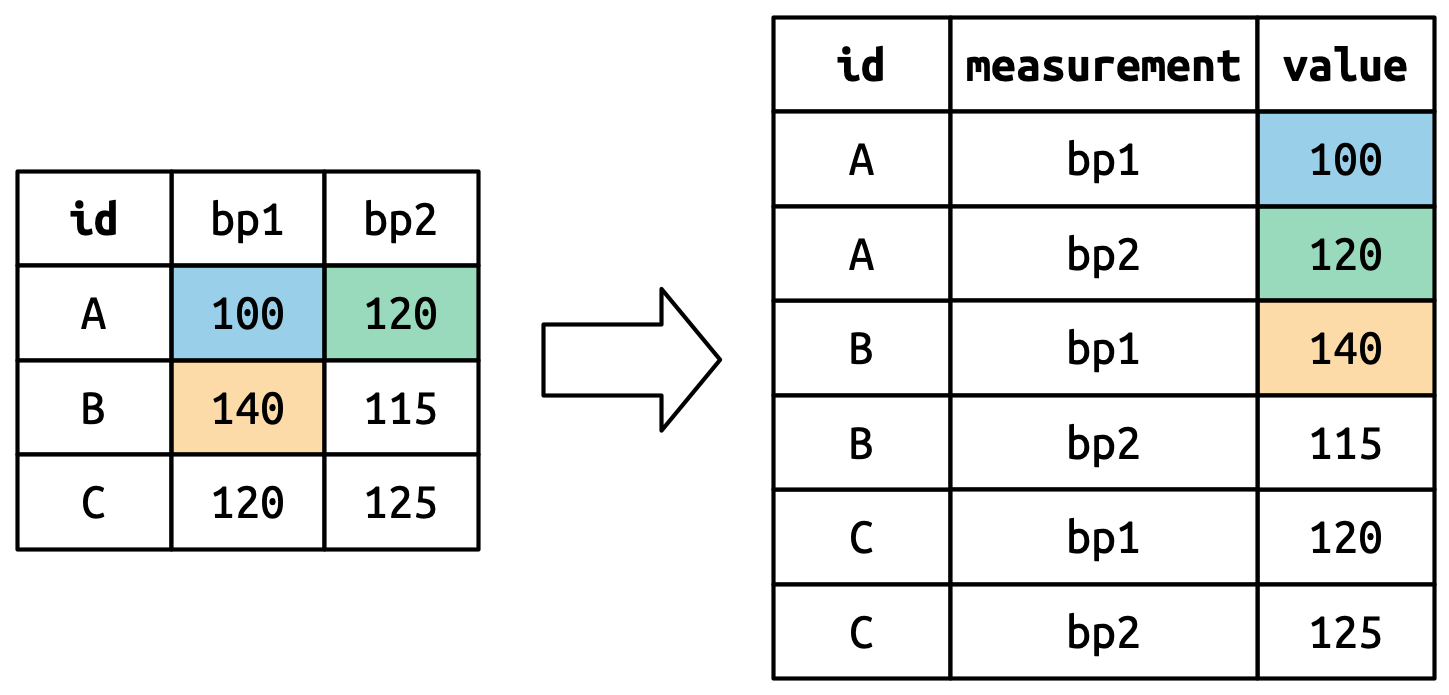

In this section, you will learn a consistent way to organize your data in Python using a system called tidy data. Getting your data into this format requires some work up front, but that work pays off in the long term. Once you have tidy data, you will spend much less time munging data from one representation to another, allowing you to spend more time on the data questions you care about.

You’ll first learn the definition of tidy data and see it applied to a simple toy dataset. Then we’ll dive into the primary tool you’ll use for tidying data: pivoting. Pivoting allows you to change the form of your data without changing any of the values.

4.1 Tidy data

You can represent the same underlying data in multiple ways. The

example below shows the same data organized in three different ways.

Each dataset shows the same values of four variables:

country, year, population, and

number of documented cases of TB (tuberculosis), but each

dataset organizes the values in a different way.

table1| country | year | cases | population |

|---|---|---|---|

| str | i64 | i64 | i64 |

| "Afghanistan" | 1999 | 745 | 19987071 |

| "Afghanistan" | 2000 | 2666 | 20595360 |

| "Brazil" | 1999 | 37737 | 172006362 |

| "Brazil" | 2000 | 80488 | 174504898 |

| "China" | 1999 | 212258 | 1272915272 |

| "China" | 2000 | 213766 | 1280428583 |

table2| country | year | type | count |

|---|---|---|---|

| str | i64 | str | i64 |

| "Afghanistan" | 1999 | "cases" | 745 |

| "Afghanistan" | 1999 | "population" | 19987071 |

| "Afghanistan" | 2000 | "cases" | 2666 |

| "Afghanistan" | 2000 | "population" | 20595360 |

| … | … | … | … |

| "China" | 1999 | "cases" | 212258 |

| "China" | 1999 | "population" | 1272915272 |

| "China" | 2000 | "cases" | 213766 |

| "China" | 2000 | "population" | 1280428583 |

table3| country | year | rate |

|---|---|---|

| str | i64 | str |

| "Afghanistan" | 1999 | "745/19987071" |

| "Afghanistan" | 2000 | "2666/20595360" |

| "Brazil" | 1999 | "37737/172006362" |

| "Brazil" | 2000 | "80488/174504898" |

| "China" | 1999 | "212258/1272915272" |

| "China" | 2000 | "213766/1280428583" |

These are all representations of the same underlying data, but they

are not equally easy to use. One of them, table1, will be

much easier to work with Polars & Plotly because it’s

tidy.

There are three interrelated rules that make a dataset tidy:

- Each variable is a column; each column is a variable.

- Each observation is a row; each row is an observation.

- Each value is a cell; each cell is a single value.

Why ensure that your data is tidy? There are two main advantages:

There’s a general advantage to picking one consistent way of storing data. If you have a consistent data structure, it’s easier to learn the tools that work with it because they have an underlying uniformity.

There’s a specific advantage to placing variables in columns because it allows Polar’s vectorized nature to shine. Plotly also works by default with tidy data formats, and requires (a little) workaround for wide formats.

Here are some examples of how Polars & Plotly work with tidy data:

# Compute rate per 10,000

table1.with_columns(

rate = pl.col("cases") / pl.col("population") * 1000

)| country | year | cases | population | rate |

|---|---|---|---|---|

| str | i64 | i64 | i64 | f64 |

| "Afghanistan" | 1999 | 745 | 19987071 | 0.037274 |

| "Afghanistan" | 2000 | 2666 | 20595360 | 0.129447 |

| "Brazil" | 1999 | 37737 | 172006362 | 0.219393 |

| "Brazil" | 2000 | 80488 | 174504898 | 0.461236 |

| "China" | 1999 | 212258 | 1272915272 | 0.16675 |

| "China" | 2000 | 213766 | 1280428583 | 0.166949 |

# Compute total cases per year

table1.group_by("year").agg(total_cases = pl.col("cases").sum())| year | total_cases |

|---|---|

| i64 | i64 |

| 1999 | 250740 |

| 2000 | 296920 |

# Visualize changes over time

table1_datefix = table1.with_columns(

date = (pl.col("year").cast(pl.String) + "-01-01").str.to_date()

)

px.line(

table1_datefix,

x="date",

y="cases",

color="country",

symbol="country",

title="Cases by Year and Country",

)While we are covering the basics of tidy data, I highly recommend reading Tidy Data by Hadley Wickham. It’s a short paper on the principles of working with tidy data and it’s benefits.

4.2 Lengthening data

The principles of tidy data might seem so obvious that you wonder if you’ll ever encounter a dataset that isn’t tidy. Unfortunately, however, most real data is untidy. There are two main reasons:

Data is often organized to facilitate some goal other than analysis. For example, it’s common for data to be structured to make data entry, not analysis, easy.

Most people aren’t familiar with the principles of tidy data, and it’s hard to derive them yourself unless you spend a lot of time working with data.

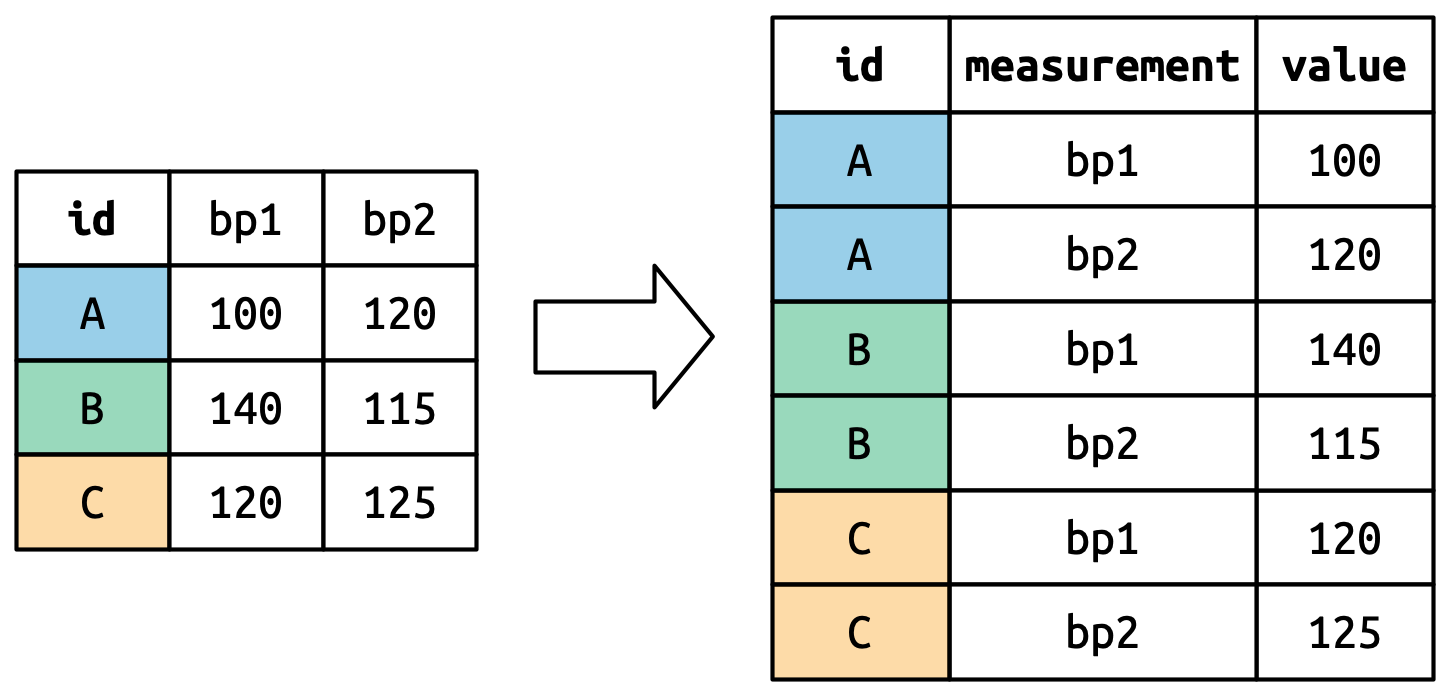

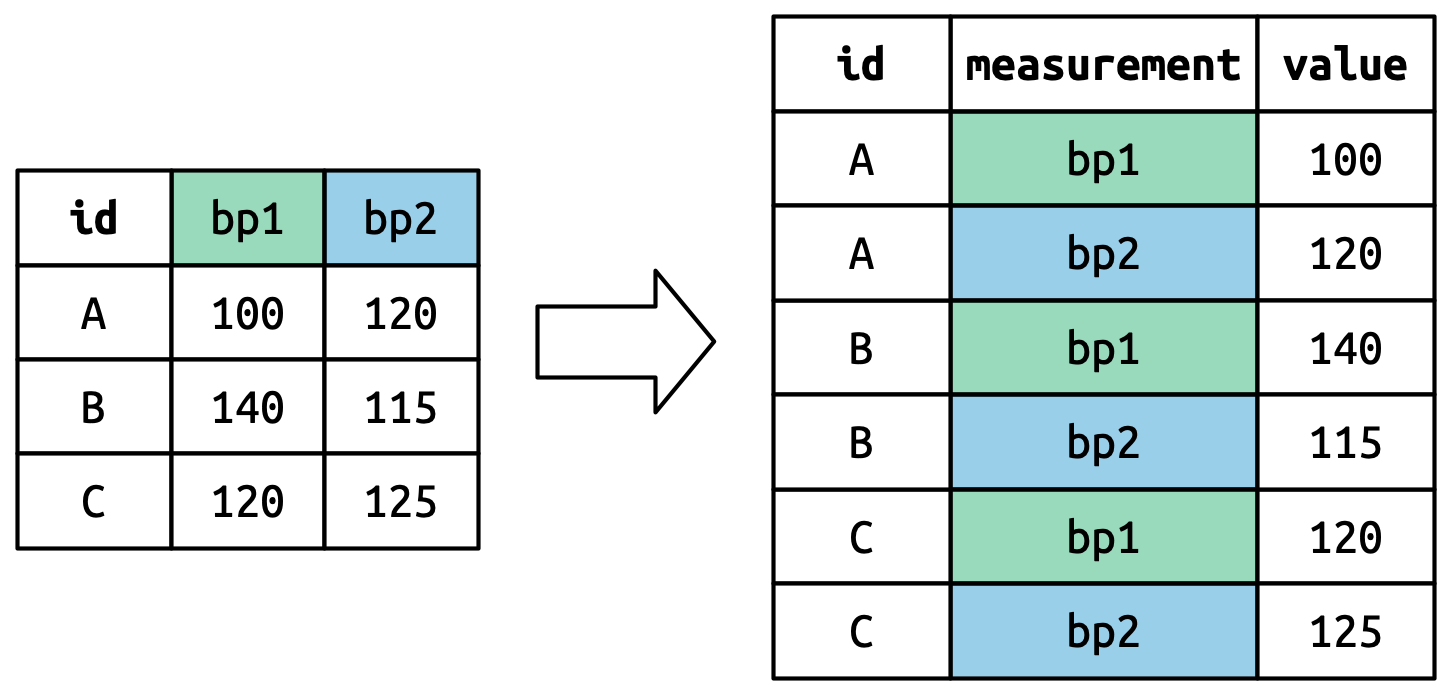

This means that most real analyses will require at least a little tidying. You’ll begin by figuring out what the underlying variables and observations are. Sometimes this is easy; other times you’ll need to consult with the people who originally generated the data. Next, you’ll pivot your data into a tidy form, with variables in the columns and observations in the rows.

Polars provides two methods for pivoting data:

DataFrame.pivot() and DataFrame.unpivot().

We’ll first start with DataFrame.unpivot() because it’s the

most common case. Let’s dive into some examples.

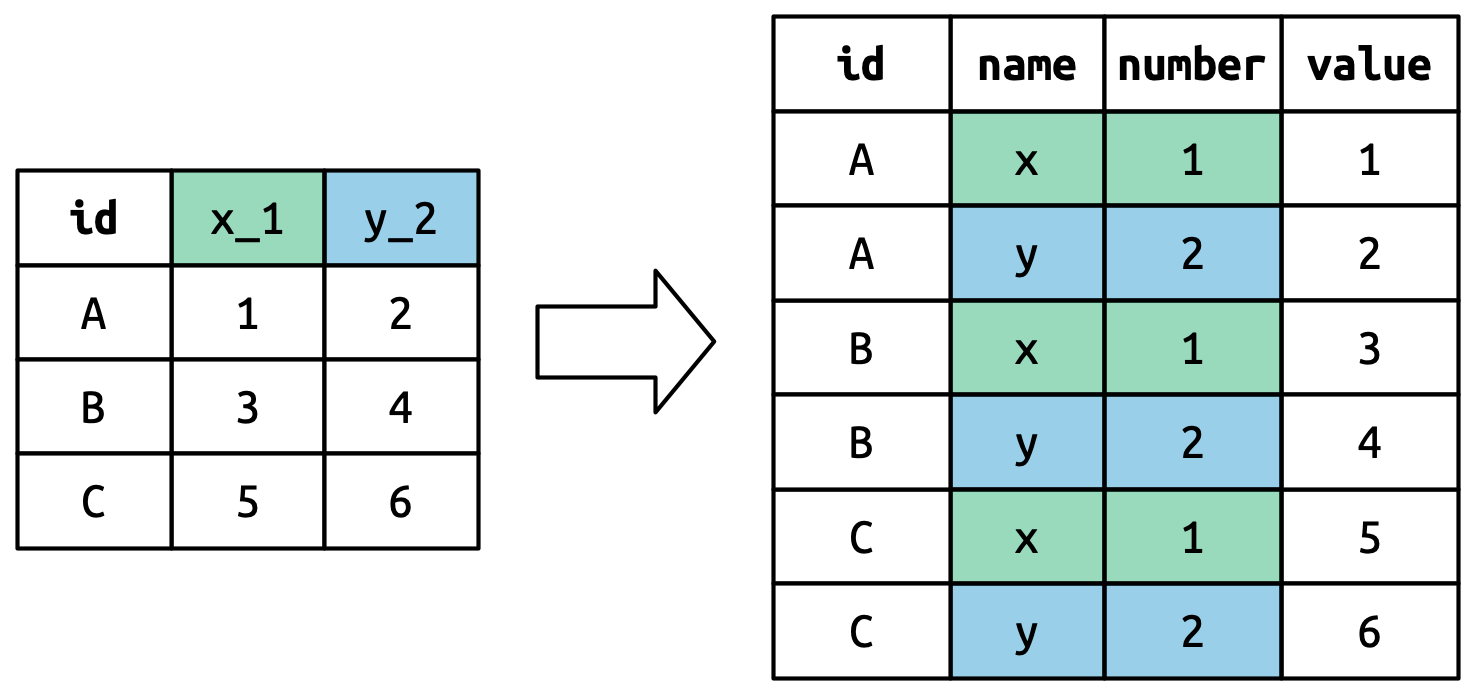

4.2.1 Data in column names

The billboard dataset records the billboard rank of

songs in the year 2000:

billboard = pl.read_csv("data/billboard.csv", try_parse_dates=True)

billboard| artist | track | date.entered | wk1 | wk2 | wk3 | wk4 | wk5 | wk6 | wk7 | wk8 | wk9 | wk10 | wk11 | wk12 | wk13 | wk14 | wk15 | wk16 | wk17 | wk18 | wk19 | wk20 | wk21 | wk22 | wk23 | wk24 | wk25 | wk26 | wk27 | wk28 | wk29 | wk30 | wk31 | wk32 | wk33 | wk34 | … | wk40 | wk41 | wk42 | wk43 | wk44 | wk45 | wk46 | wk47 | wk48 | wk49 | wk50 | wk51 | wk52 | wk53 | wk54 | wk55 | wk56 | wk57 | wk58 | wk59 | wk60 | wk61 | wk62 | wk63 | wk64 | wk65 | wk66 | wk67 | wk68 | wk69 | wk70 | wk71 | wk72 | wk73 | wk74 | wk75 | wk76 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| str | str | date | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | … | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | i64 | str | str | str | str | str | str | str | str | str | str | str |

| "2 Pac" | "Baby Don't Cry (Keep..." | 2000-02-26 | 87 | 82 | 72 | 77 | 87 | 94 | 99 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "2Ge+her" | "The Hardest Part Of ..." | 2000-09-02 | 91 | 87 | 92 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "3 Doors Down" | "Kryptonite" | 2000-04-08 | 81 | 70 | 68 | 67 | 66 | 57 | 54 | 53 | 51 | 51 | 51 | 51 | 47 | 44 | 38 | 28 | 22 | 18 | 18 | 14 | 12 | 7 | 6 | 6 | 6 | 5 | 5 | 4 | 4 | 4 | 4 | 3 | 3 | 3 | … | 15 | 14 | 13 | 14 | 16 | 17 | 21 | 22 | 24 | 28 | 33 | 42 | 42 | 49 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "3 Doors Down" | "Loser" | 2000-10-21 | 76 | 76 | 72 | 69 | 67 | 65 | 55 | 59 | 62 | 61 | 61 | 59 | 61 | 66 | 72 | 76 | 75 | 67 | 73 | 70 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| "Yearwood, Trisha" | "Real Live Woman" | 2000-04-01 | 85 | 83 | 83 | 82 | 81 | 91 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "Ying Yang Twins" | "Whistle While You Tw..." | 2000-03-18 | 95 | 94 | 91 | 85 | 84 | 78 | 74 | 78 | 85 | 89 | 97 | 96 | 99 | 99 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "Zombie Nation" | "Kernkraft 400" | 2000-09-02 | 99 | 99 | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

| "matchbox twenty" | "Bent" | 2000-04-29 | 60 | 37 | 29 | 24 | 22 | 21 | 18 | 16 | 13 | 12 | 8 | 6 | 1 | 2 | 3 | 2 | 2 | 3 | 4 | 5 | 4 | 4 | 6 | 9 | 12 | 13 | 19 | 20 | 20 | 24 | 29 | 28 | 27 | 30 | … | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null | null |

In this dataset, each observation is a song. The first three columns

(artist, track, and date.entered)

are variables that describe the song. Then we have 76 columns,

wk1-wk76, that describe the rank of the song

in each week. Here, the column names are one variable (the

week) and the cell values are another (the

rank).

To tidy this data, we’ll use DataFrame.unpivot():

billboard.unpivot(

index=["artist", "track", "date.entered"],

variable_name="week",

value_name="rank"

)| artist | track | date.entered | week | rank |

|---|---|---|---|---|

| str | str | date | str | str |

| "2 Pac" | "Baby Don't Cry (Keep..." | 2000-02-26 | "wk1" | "87" |

| "2Ge+her" | "The Hardest Part Of ..." | 2000-09-02 | "wk1" | "91" |

| "3 Doors Down" | "Kryptonite" | 2000-04-08 | "wk1" | "81" |

| "3 Doors Down" | "Loser" | 2000-10-21 | "wk1" | "76" |

| … | … | … | … | … |

| "Yearwood, Trisha" | "Real Live Woman" | 2000-04-01 | "wk76" | null |